The landscape of biomedical research

Posted on: 16 August 2023

Preprint posted on 25 May 2023

Article now published in Patterns at http://dx.doi.org/10.1016/j.patter.2024.100968

ChatGPT identifies gender disparities in scientific peer review

Posted on:

Preprint posted on 19 July 2023

Article now published in eLife at https://doi.org/10.7554/eLife.90230.1

Artificial Intelligence can map the landscape of (biomedical) research – and spot gender bias too!

Selected by Reinier Prosee

Categories: scientific communication and education

Introduction

Although artificial intelligence as a field dates back to the 1950s, the fact that we are living in the era of AI has only really begun to dawn on (most of) us since the introduction of tools such as ChatGPT and Google Bard. While the rapid acceleration in AI innovation can be a source of trepidation and anxiety, one can hardly ignore the wealth of exciting opportunities it creates. These can, or already do, drive important developments in fields like medicine, finance, transportation, and robotics, among others.

In the world of scholarly publishing, the rise of AI could also have a profound impact on existing workflows. With the ever-growing volume of available data and (online) publications, we may come to rely on AI to help us keep an overview of the existing literature, and to evaluate and curate it. Interestingly, a recent preprint from Rita González-Márquez and colleagues shows that we can already use AI to spot trends in the publishing landscape at large.

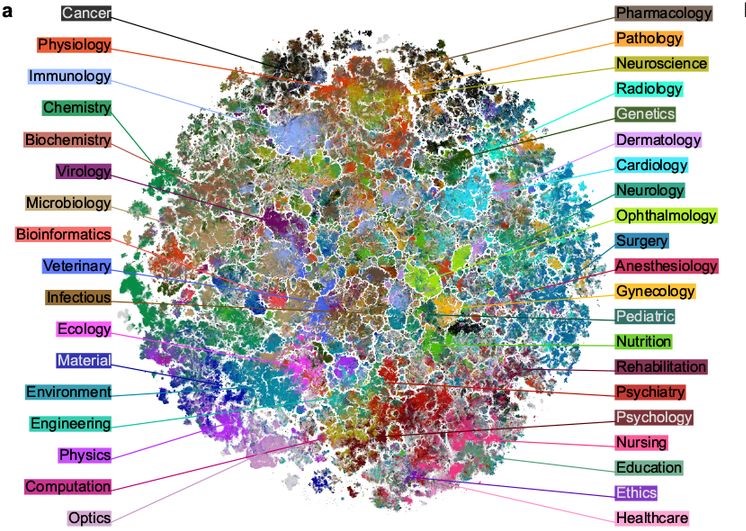

In this preprint, a large language model (PubMedBERT) was used to process 21 million English-language abstracts from the PubMed database in order to map the landscape of biomedical research. In doing so, the authors created a (rather stunning) interactive atlas that allowed them to explore many different facets of biomedical publishing, including the rise of research trends, retracted papers originating from paper mills, and gender disparities in the (biomedical) research landscape.

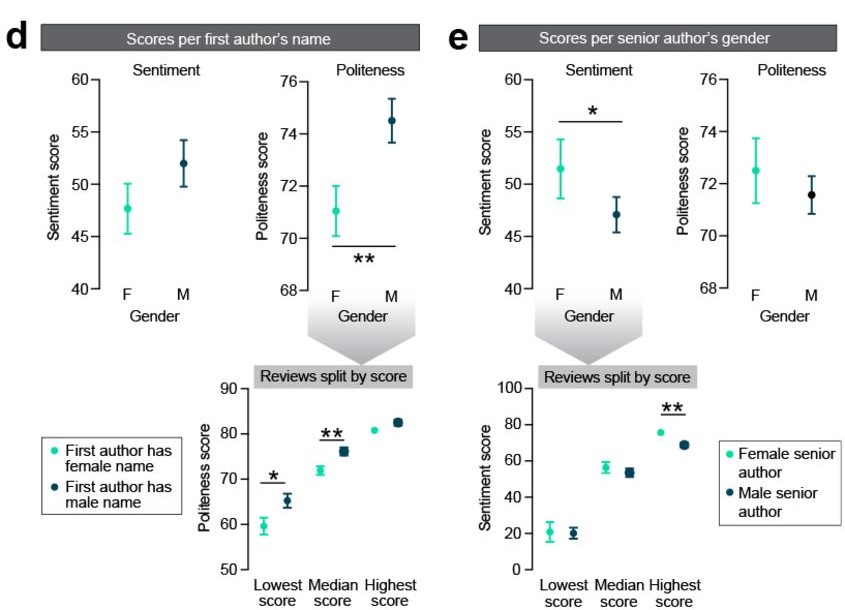

On this note, another preprint used OpenAI's ChatGPT to analyse gender disparities in peer review specifically. The author, Jeroen P. H. Verharen, asked ChatGPT to score the peer review reports of 200 neuroscience papers accepted for publication in Nature Communications between 2022 and 2023 for sentiment and politeness, uncovering a gender bias in peer review. This is another example of how AI can inform, and help us reflect on, our current publishing practices.

Key Findings (captured with the help of ChatGPT!)

The landscape of biomedical research

1. Atlas Interpretation and Structure:

- The 2D atlas produced as part of this study revealed the global organization of biomedical literature.

- Related disciplines were located near each other, showing the connection between them.

- The map could also reveal the evolution of research topics over time.

- Clusters on the map represented papers on narrow, well-defined topics (like COVID-19; see below).

2. Uniqueness of COVID-19 Literature:

- The atlas showed that COVID-19-related papers formed an unusually isolated, distinct cluster.

- The COVID-19 cluster had its own internal structure, with subtopics such as mental health, epidemiology, and immunology.

3. Changing research trends within disciplines:

- The atlas also captured shifting trends within disciplines, illustrated using neuroscience as an example.

- The 2D atlas revealed the shifting focus of neuroscience from molecular to cognitive and computational aspects over time.

4. Uptake of Machine Learning (ML):

- The authors used the atlas to study the adoption of machine learning across biomedical fields.

- Different ML techniques were prominent in different areas, such as deep learning for image analysis.

- The uptake of ML techniques varied across disciplines, with some adopting ML earlier than others.

5. Gender Disparities in Publishing:

- Female authors were distributed unevenly across disciplines, with variations in senior authorship.

- Gender could be inferred for 40.7% of all papers. Among these papers, 42.4% of first authors and 29.1% of last authors were female.

- The study found an increase in the proportion of female authors over time.

6. Detecting Suspicious Literature:

- The atlas was used to identify regions with a high concentration of retracted papers. These related to cancer drugs, marker genes, and microRNAs.

- The map’s similarity-based approach helped identify clusters that may require further investigation for fraudulent content.

ChatGPT identifies gender disparities in scientific peer review

1. Sentiment and Politeness Distribution:

- Most reviews (90.1%) had positive sentiment, while 7.9% were negative, and 2% were neutral.

- Nearly all reviews (99.8%) were deemed polite by the algorithm, with only one review considered rude.

- There was a strong relationship between the sentiment and politeness scores, indicating that more positive reviews tend to use more polite language.

2. Consistency Across Reviewers:

- When the consistency of sentiment and politeness scores across reviewers was investigated, sentiment scores showed very low, if any, correlation between reviewers.

- Reviewers showed high levels of disagreement in their favourability assessments, suggesting subjectivity in the peer review process.

3. Exploring Disparities:

- Sentiment and politeness scores did not differ significantly by neuroscience subfield, by the continent of the senior author's institution, or by institutional prestige (QS World Ranking).

- However, papers whose first authors had female names received less polite reviews, although their sentiment scores were not affected.

- Papers with female senior authors received higher sentiment scores (more favourable reviews), with no difference in politeness.

- No interaction between the genders of first and senior authors was observed for either sentiment or politeness scores.

What I like about these preprints

Preparing this preLight reminded me of the potential of tools like ChatGPT to fulfil certain roles within the publishing ecosystem. It can summarise any preprint in less time than it takes you to read its first sentence. Still, while reading both preprints discussed here in more detail, I realised that ChatGPT may get things right most of the time, but certainly not always (especially when things are more nuanced).

This raises the question of how AI can and should be used in the publishing ecosystem. The two preprints described here take a very creative angle, using AI to uncover larger trends within the publishing industry, which can be invaluable when deciding on new policies and practices. It is exciting to think that more of these studies will follow: studies that hold up a mirror to help us reflect on where we stand and where we could, and perhaps should, be going.

Questions for the authors:

Rita González-Márquez et al.:

- Now that there is a 2D map of biomedical research, what impact do you think it could have on policymakers, funding bodies, and research institutions?

- The 2D map shows research trends evolving in the recent past, but will there also be a way to predict future trends?

- As you mention, the gender inference model used in this study has clear limitations. It managed to obtain inferred genders for 40.7% of all included papers. How can this inference model be improved for future studies?

- Do you expect that in other research areas, a similar 2D map will be as informative as the one you present here?

Jeroen P. H. Verharen:

- You mention that there is probably an over-representation of positive scores in your analysis. For a potential follow-up study, how would you assemble a more representative collection of peer review reports?

- Could you speculate on ways in which we can more effectively standardise the current peer review process?

- How do you think OpenAI's tools could help us address weaknesses in our current publishing ecosystem, perhaps beyond the scope of scientific peer review?

Sign up to customise the site to your preferences and to receive alerts

Register hereAlso in the scientific communication and education category:

DNA Specimen Preservation using DESS and DNA Extraction in Museum Collections: A Case Study Report

Daniel Fernando Reyes Enríquez, Marcus Oliveira

Kosmos: An AI Scientist for Autonomous Discovery

Roberto Amadio et al.

Identifying gaps between scientific and local knowledge in climate change adaptation for northern European agriculture

Anatolii Kozlov

(No Ratings Yet)

(No Ratings Yet)