A depth map of visual space in the primary visual cortex

Posted on: 18 November 2024

Preprint posted on 30 September 2024

How do animals sense depth? Work from the Znamenskiy group (@petrznam) reveals 3D depth-selective responses in the primary visual cortex.

Selected by Wing Gee Shum, Phoebe Reynolds

Categories: animal behavior and cognition, neuroscience

Background and Introduction

Depth perception is crucial for animals to navigate, avoid danger, and interact effectively with their surroundings. Our visual system extracts three-dimensional (3D) information from two-dimensional (2D) retinal images, but the neural connections and properties underlying depth perception remain poorly understood.

Computer vision systems often use “depth maps” derived from 2D images to estimate distances in a scene, raising the question of whether the mammalian visual cortex might produce similar maps. Depth perception in mammals combines binocular and monocular cues. In rodents, which have limited binocular overlap, monocular cues play a key role in depth perception. One such monocular cue is motion parallax: as an observer moves, objects at different distances move across the visual field at different speeds, which provides a source of depth information (Nadler et al., 2008; Kim et al., 2015; Parker et al., 2022). Understanding how the brain encodes depth through motion parallax in V1, the primary visual cortex, could therefore shed light on the broader mechanisms of depth perception and enhance our knowledge of visual processing in both artificial and biological systems.

This preprint by He and colleagues investigates whether the primary visual cortex in mice encodes a depth map of the visual scene from motion parallax, and how neural activity integrates self-motion signals along with visual cues to help achieve this. Here, the authors focus on locomotion-related modulation and how depth selectivity is distributed across the visual field.

Key findings

1. Depth selectivity in V1 is generated from motion parallax

First, the researchers designed a virtual reality (VR) environment in which head-fixed mice navigated with motion parallax as a depth cue. The activity of excitatory neurons in layer 2/3 of V1 was recorded using two-photon calcium imaging as the mice moved through the VR environment, where the optic flow speed of each virtual object depended on its distance.

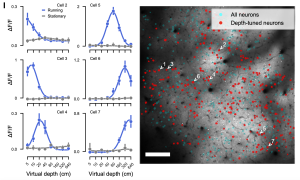

In this context, a significant proportion (51.4%) of V1 neurons showed a depth-selective response. These neurons were tuned to specific virtual depths and their responses spanned the full range of virtual depths, from 5 cm to 640 cm. Interestingly, this depth selectivity was only present when the mice were moving, indicating that locomotion is essential for generating depth-selective responses (Figure 1). With motion parallax as a depth cue in the VR set-up, the study suggests that V1 neurons can generate a depth map of the visual scene as the animal moves, and that self-motion cues are crucial for depth estimation.
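The geometry behind motion parallax can be made concrete with a short sketch. It is not taken from the preprint: it assumes an observer moving in a straight line and a point located directly to the side, in which case the point's angular speed is the running speed divided by its distance. The function names, the example running speed of 20 cm/s, and the doubling steps between 5 cm and 640 cm are illustrative assumptions.

```python
import math

def optic_flow_deg_per_s(running_speed_cm_s, depth_cm):
    """Angular speed of a point directly to the side of a translating observer.

    Assumes straight-line motion and a viewing direction perpendicular to the
    heading, where angular speed (rad/s) = running speed / distance.
    """
    return math.degrees(running_speed_cm_s / depth_cm)

def depth_from_parallax_cm(running_speed_cm_s, flow_deg_per_s):
    """Invert the relation above: depth = running speed / angular speed."""
    return running_speed_cm_s / math.radians(flow_deg_per_s)

# Hypothetical depth steps: the 5 cm and 640 cm endpoints are from the text,
# the doubling in between is an assumption made for illustration.
virtual_depths_cm = [5 * 2 ** k for k in range(8)]
running_speed_cm_s = 20.0  # assumed example speed

flows = [optic_flow_deg_per_s(running_speed_cm_s, d) for d in virtual_depths_cm]
for depth, flow in zip(virtual_depths_cm, flows):
    print(f"depth {depth:4d} cm -> optic flow {flow:7.2f} deg/s")
```

At a fixed running speed, nearer objects produce faster optic flow, and optic flow alone is ambiguous about depth unless the running speed is also known. This is why a stationary animal receives no parallax signal in this set-up.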

2. Depth selectivity is formed from the integration of optic flow and locomotion signals

Next, the authors tried to determine whether depth selectivity could be explained by optic flow, locomotion, or a combination of these two factors. To test how optic flow and locomotion are integrated, they evaluated five models: pure optic flow, pure running speed, and the linear summation, conjunction, or ratio of both.

The authors found that the conjunctive model, which proposes a specific combination of optic flow and running speed, reliably outperformed models that were based solely on optic flow, running speed, or their linear summation (Figure 2). This suggests that V1 neurons integrate self-motion as well as visual motion to encode depth through motion parallax. Conjunctive coding may help V1 neurons estimate depth from the relative motion of the observer, which is essential for navigating an ever-changing and complex environment.
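To illustrate how the five candidate models differ, here is a toy sketch. The functional forms are assumptions chosen for illustration (Gaussian tuning on a log axis, with hypothetical preferred values and widths) and are not the authors' fitted models. The sketch only shows how each model would respond to a given pair of running speed and optic flow speed.

```python
import math

PREF_RUN = 20.0   # hypothetical preferred running speed (cm/s)
PREF_FLOW = 30.0  # hypothetical preferred optic flow speed (deg/s)

def log_gaussian(x, preferred, width=0.5):
    """Gaussian tuning on a log axis, equal to 1.0 when x == preferred."""
    return math.exp(-0.5 * ((math.log(x) - math.log(preferred)) / width) ** 2)

def optic_flow_only(run, flow):
    return log_gaussian(flow, PREF_FLOW)

def running_only(run, flow):
    return log_gaussian(run, PREF_RUN)

def linear_sum(run, flow):
    # Responds when either input matches its preferred value.
    return 0.5 * (running_only(run, flow) + optic_flow_only(run, flow))

def conjunction(run, flow):
    # Responds only when both inputs match their preferred values.
    return running_only(run, flow) * optic_flow_only(run, flow)

def ratio(run, flow):
    # Responds to any pair with the preferred run/flow ratio.
    return log_gaussian(run / flow, PREF_RUN / PREF_FLOW)

MODELS = {
    "optic flow": optic_flow_only,
    "running": running_only,
    "sum": linear_sum,
    "conjunction": conjunction,
    "ratio": ratio,
}

for run, flow in [(20.0, 30.0), (10.0, 15.0), (20.0, 1000.0)]:
    row = ", ".join(f"{name}={fn(run, flow):.2f}" for name, fn in MODELS.items())
    print(f"run={run:g} cm/s, flow={flow:g} deg/s: {row}")
```

In this toy version, the ratio model responds identically to any pair with the same running-to-flow ratio, the conjunction model responds strongly only near one particular pair, and the summation model still responds when just one input matches.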

3. Closed-loop coupling of optic flow and locomotion enables accurate depth representation

The authors then asked whether the closed-loop coupling of optic flow and locomotion is required for conjunctive coding and accurate depth representation in the primary visual cortex. They observed similar neuronal responses in closed-loop trials (where the mouse’s movement directly determines the optic flow) and open-loop trials (where optic flow follows an external, pre-recorded trajectory), suggesting that closed-loop coupling is not required for the conjunctive coding of optic flow and locomotion. However, the accuracy of depth representation decreased in open-loop conditions, which shows that closed-loop coupling is necessary for an accurate representation of depth, as it aligns the current optic flow with locomotion-related modulation. Real-time integration of movement and optic flow significantly improves depth representation, indicating the importance of sensory feedback for inferring depth.
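One way to see why open-loop playback could degrade depth estimates is a small simulation. This is a sketch under simple assumptions, not the authors' analysis: it uses the relation optic flow (rad/s) = running speed / depth for a point to the side of the observer, draws hypothetical running speeds between 5 and 40 cm/s, and asks what depth is implied by the ratio of the animal's own running speed to the optic flow it sees.

```python
import random
import statistics

def implied_depths_cm(own_speeds, flow_source_speeds, depth_cm):
    """Depth implied by own running speed divided by the optic flow on screen.

    The optic flow is generated from `flow_source_speeds`: the animal's own
    speeds in closed loop, or a replayed trajectory in open loop.
    """
    depths = []
    for own, source in zip(own_speeds, flow_source_speeds):
        flow_rad_s = source / depth_cm     # flow shown on the screen
        depths.append(own / flow_rad_s)    # depth inferred by the observer
    return depths

rng = random.Random(0)  # fixed seed so the sketch is repeatable
true_depth_cm = 80.0    # assumed example depth
own_running = [rng.uniform(5.0, 40.0) for _ in range(200)]
replayed_running = [rng.uniform(5.0, 40.0) for _ in range(200)]

closed_loop = implied_depths_cm(own_running, own_running, true_depth_cm)
open_loop = implied_depths_cm(own_running, replayed_running, true_depth_cm)

print("closed-loop spread (cm):", statistics.pstdev(closed_loop))
print("open-loop spread (cm):", statistics.pstdev(open_loop))
```

In closed loop, the implied depth matches the virtual depth on every sample, because running speed and optic flow share the same source. In open loop, the two are decoupled, so the implied depth scatters even though the virtual object has not moved.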

4. V1 neurons have 3D receptive fields

The researchers were interested in whether the depth-selective neurons in V1 responded to visual stimuli at specific locations on the retina and at specific virtual depths, indicating 3D receptive fields. They found that the majority (66.3%) of depth-selective neurons had 3D receptive fields and would respond to specific retinotopic locations and depths. These results support the idea that V1 neurons encode the position of visual stimuli and combine spatial location with depth information to create a complex, 3D map of the visual environment.

5. There is a non-homogeneous distribution of depth selectivity across V1

Finally, the authors examined how depth-selective neurons are distributed, given the implications for topographic mapping. They found that depth-selective neurons were not uniformly distributed across V1, with nearby neurons often preferring different depths. In general, there was an overrepresentation of near depths in the upper lateral visual field, while far-preferring neurons were more prominent in the lower visual field. This mapping suggests a possible functional specialisation, with different regions of V1 encoding different depths and together creating a comprehensive depth map. This pattern may prioritise visual processing, potentially offering ecological advantages, such as aiding the animal in threat detection.

Why did we choose this preprint?

We chose this preprint because it is an exciting paper that refines our understanding of depth perception mechanisms in the brain. The paper establishes a relationship between V1 neurons and depth selectivity, which shifted our perspective on these neurons: it made us realise that their role goes beyond 2D feature extraction and that they play an integrative role in 3D depth perception. We are excited that the conclusions of this study have the potential to extend beyond basic neuroscience, as understanding how the brain encodes depth can inform the development of more sophisticated artificial visual systems and benefit robotics and future computer vision models.

References

Nadler, J.W., Angelaki, D.E., and DeAngelis, G.C. (2008). A neural representation of depth from motion parallax in macaque visual cortex. Nature, 452(7187):642–645. doi: 10.1038/nature06814.

Kim, H.R., Angelaki, D.E., and DeAngelis, G.C. (2015). A functional link between MT neurons and depth perception based on motion parallax. Journal of Neuroscience, 35(6):2766–2777. doi: 10.1523/jneurosci.3134-14.2015.

Parker, P.R.L., Abe, E.T.T., Beatie, N.T., Leonard, E.S.P., Martins, D.M., Sharp, S.L., Wyrick, D.G., Mazzucato, L., and Niell, C.M. (2022). Distance estimation from monocular cues in an ethological visuomotor task. eLife, 11:e74708. doi: 10.7554/eLife.74708.

doi: https://doi.org/10.1242/prelights.38925
